The idea of robots learning body language is at once terrifying and potentially very cool.

On the one hand, the idea of public-facing machines that can analyze subtle cues at work or in a social setting is unsettling.

We’ve all been subjected to the amateur body language expert who likes to tell people how they feel. The worst, right?

Now, robots are learning more and more about our subtle facial expressions and closed-off stances. But it’s not all bad. See, this next step in understanding could make it easier to work with our future colleagues.

Here’s a little more about where we’re at with robots and body language.

The next phase in natural language processing

Natural Language Processing (NLP) is gaining a lot of traction these days. The market is expected to grow to over $16 billion by 2021, as more businesses get wise to the benefits of NLP.

Much of this growth can be chalked up to marketing efforts, where companies that can afford it are incorporating customer service chatbots, mining reviews for sentiment, and collecting as much data as they can, faster than ever.

But, increasingly, researchers have started paying attention to body language, too. Humans communicate with more than the written word, and robots stand to help us more if they fully understand our language.

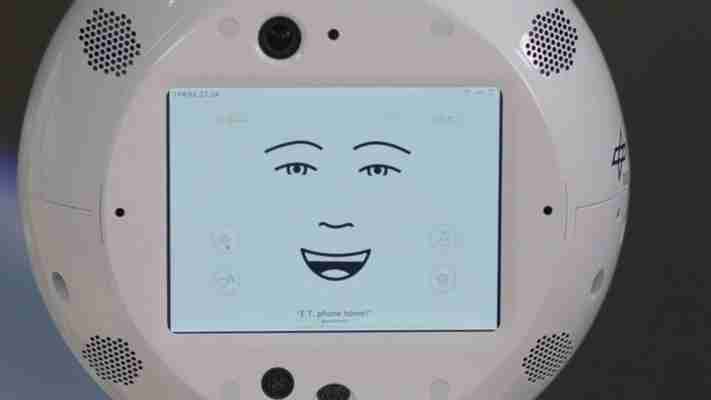

Better collaboration is on the horizon

Robots are already in the mix. But they’ve long been confined to performing pre-programmed functions. As Cornell engineering professor Guy Hoffman told MIT’s Sloan Review, researchers are increasingly exploring how machines can work with us.

Hoffman says that subtle changes (like programming robots to nod when receiving instructions) can have a positive impact on the humans working with the machine. Whether we’re conscious of it or not, robots have the ability to affect our behavior.

So, programming some human-like behaviors into bots could give us more positive feelings about the changes associated with integrating AI into our lives.

Artist Madeline Gannon has echoed this idea, albeit in a different way. Gannon is an installation artist interested in how we interact with machines, which she refers to as “animals.” Through her interactive art projects, Gannon explores how humans and bots relate to one another, as well as how programming body language can help us become more comfortable with robots.

Essentially, Gannon programs robots to respond to human behavior. While she admits it’s something of a one-sided relationship, that little tweak makes them seem more accessible, rather than like cold, mechanical job-stealing machines.

It’s essential that robot body language is used for good

This piece from Carnegie Mellon University looks at the process of training robots to recognize hand signals and other nonverbal cues. This technology can help machines detect the nuances of nonverbal communication.

One key benefit is the potential use in group settings, allowing robots to detect people’s moods or determine whether it’s appropriate to interrupt someone.

There’s also the potential to help people with dyslexia or autism quickly analyze others’ behavior — picking up on cues they otherwise might not notice.

The article also mentions that this technology could enable new approaches to treating behavioral issues or conditions such as depression.

Admittedly, this concept initially made us bristle, as algorithmic analyses have made their way into the job hunting process in some dark ways. If robots are scanning us, they’d better be friendly, right?

In any case, a friendly coworker is better than one who doesn’t quite understand context or stares blankly when you say something. Whether we deal with humans or robots, we feel better when our conversation partner “hits the ball back.” Because we’re inevitably going to deal with machines in a more personal way, pre-programmed cues may be able to help us cope with the changes.