Many people likely know AWS as the world’s largest provider of cloud computing services. But far fewer people think of the Amazon subsidiary as a supercomputing heavyweight.

That’s largely because AWS is happy to operate at the less sexy end of the high-performance computing (HPC) spectrum, far from the shiny proof-of-concept systems that decorate the summit of the Top500 rankings.

Instead, the organization is focused on democratizing access to supercomputing resources by offering cloud-based services that large numbers of companies and academic institutions can use.

One of the people responsible for delivering on this objective is Brendan Bouffler, known to some as “Boof”, who in his role as Head of Developer Relations for HPC acts as the intermediary between customers and the AWS engineering team.

As someone with years of experience building supercomputers, he contends that, counter-intuitively, it is often the smaller-scale machines that have the greatest impact, because raw performance is not necessarily the most important metric.

“It is fun to design really big machines, because it’s a complex mathematical problem you have to dismantle,” he told us. “But I always got more joy out of building the smaller systems, because that’s where the largest amount of science is done.”

The epiphany at AWS was that this approach to HPC, whereby productivity takes precedence over performance, could be transplanted effectively into the cloud.

HPC in the cloud

Although large-scale supercomputers like Fugaku, which currently tops the performance rankings, are excellent examples of how far the latest hardware can be pushed, these systems are curiosities first and utilities only second.

As Bouffler explains, the fundamental problem with large on-premise machines is ease of access. A system capable of breaking the exascale barrier would be an impressive feat of engineering, but of diminished practical use if researchers must queue for weeks to use it.

“A lot of people who build supercomputers, myself included, fall into the trap of worrying about squeezing out an additional 1% of performance. It’s laudable on one level, but the obsession means it's easy to miss the low-hanging fruit,” Bouffler told us.

“What’s more important is the cadence of research; that’s actually where the opportunity is for the scientific community.”

As such, the AWS approach is as much about availability and elasticity as it is about performance. With the company’s as-a-service offerings, customers can launch their HPC workloads in the cloud almost immediately and scale the allocated resources up or down as required, all but eliminating wastage.

“It’s about creating highly equitable access,” said Bouffler. “If you’ve got the budget and desire to solve a problem, you’ve got the computing resources you need.”

The benefits of such a system have been particularly evident since the start of the pandemic, during which companies like Moderna and AstraZeneca have used AWS instances for vaccine development.

According to Bouffler, the world might not have a vaccine today (let alone several) were it not for cloud-based HPC, which allowed research to be kickstarted swiftly and scaled up at a moment’s notice.

“The researchers we worked with wanted flexibility and raw capacity on tap. If you make computing invisible and put the power in the hands of the people with the smart ideas, they can do really powerful things.”

Our data center, our silicon, our rules

Bouffler is the first to admit that the HPC community doesn’t pay a great deal of attention to what’s going on inside AWS. But he insists there is plenty of innovation coming out of the organization.

Historically, for example, cloud-based instances have excelled at running so-called “embarrassingly parallel” workloads that can be divided easily into a high volume of distinct tasks, but perform less well when communication between nodes is required.
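To make the distinction concrete, here is a minimal Python sketch. The functions are hypothetical toy workloads, not anything AWS runs: the first task is embarrassingly parallel (each run needs only its own input), while the second, a simple 1D diffusion update, needs neighboring values at every step, which on a real cluster would mean exchanging data between nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def simulate_scenario(seed: int) -> int:
    """Hypothetical independent task: a toy pseudo-random run driven by its seed."""
    x = seed
    for _ in range(1000):
        x = (1103515245 * x + 12345) % 2**31  # linear congruential step
    return x % 100


def run_embarrassingly_parallel(seeds: list[int], workers: int = 4) -> list[int]:
    # Each task depends only on its own seed, so the work can be farmed out to
    # any number of workers (or cloud instances) with no communication at all.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(simulate_scenario, seeds))


def diffuse(cells: list[float], steps: int, alpha: float = 0.25) -> list[float]:
    # A tightly coupled workload: every interior update reads its neighbors'
    # values from the previous step. If the cells were split across nodes,
    # each step would require exchanging boundary values over the network,
    # which is where interconnect performance starts to matter.
    for _ in range(steps):
        new = cells[:]
        for i in range(1, len(cells) - 1):
            new[i] = cells[i] + alpha * (cells[i - 1] - 2 * cells[i] + cells[i + 1])
        cells = new
    return cells


if __name__ == "__main__":
    print(run_embarrassingly_parallel(list(range(8))))
    print(diffuse([0.0, 0.0, 1.0, 0.0, 0.0], steps=3))
```

The first function scales simply by adding workers; the second only scales well if the per-step boundary exchange is fast, which is the gap the interconnect work described below aims to close.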

Instead of bringing InfiniBand to the cloud, AWS came up with a different way to solve the problem. The company developed a technology called Elastic Fabric Adapter (EFA), which it says enables application performance on par with on-premise HPC clusters for complex workloads like machine learning and fluid dynamics simulation.

Unlike a conventional InfiniBand fabric, which typically sends a flow’s packets from A to B along a single route, EFA spreads the packets thinly across many paths through the network.

“We had to find a way of running HPC in the cloud, but didn’t want to go and make the cloud look like an HPC cluster. Instead, we decided to redesign the HPC fabric to take advantage of the attributes of the cloud,” Bouffler explained.

“EFA sprays the packets like a swarm across virtually all pathways at once, which yields as good, if not better, performance. The scaling doesn’t stop when the network gets congested, either; the system assumes there is congestion from the outset, so the performance remains flat even as the HPC job gets larger.”
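A toy model helps show why spreading packets can pay off. The sketch below is a simplified illustration under stated assumptions, not EFA’s actual protocol: each network path is modeled as a queue with a fixed per-packet delivery time, one path is congested, and the “sprayed” sender greedily places each packet on whichever path would deliver it soonest. It ignores packet reordering, retransmission, and real congestion signals, and the function names are hypothetical.

```python
import heapq


def single_path_finish(num_packets: int, service_times: list[float], path: int) -> float:
    # Toy model: every packet follows one fixed route, so they all queue
    # behind each other on that path, congested or not.
    return num_packets * service_times[path]


def sprayed_finish(num_packets: int, service_times: list[float]) -> float:
    # Toy model: each packet goes to whichever path would deliver it soonest,
    # so a congested (slow) path simply ends up carrying fewer packets.
    # Heap entries: (delivery time if the next packet is sent on this path, path index).
    heap = [(t, i) for i, t in enumerate(service_times)]
    heapq.heapify(heap)
    finish = 0.0
    for _ in range(num_packets):
        done, i = heapq.heappop(heap)
        finish = max(finish, done)
        heapq.heappush(heap, (done + service_times[i], i))
    return finish


if __name__ == "__main__":
    # Hypothetical per-packet delivery times: path 3 is five times slower (congested).
    times = [1.0, 1.0, 1.0, 5.0]
    print("single path via congested link:", single_path_finish(80, times, 3))
    print("single path via clear link:", single_path_finish(80, times, 0))
    print("sprayed across all paths:", sprayed_finish(80, times))
```

In this model the sprayed sender routes most traffic around the slow link and uses the spare capacity of every path at once, whereas a single fixed route is limited to one path’s capacity and is stuck with any congestion it happens to cross.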

In 2018, meanwhile, AWS announced it would begin developing its own custom Arm-based server processor, called Graviton. Although not geared exclusively towards HPC use cases, the Graviton series has opened a number of doors for AWS, because it allowed the company to rip out all the features that weren’t essential to its needs and double down on those that were.

“When you’re designing something as big as a cloud, you have to assume things are going to fail,” said Bouffler. “Generally speaking, removing unnecessary features means you have much closer control over the failure profile, and having control over the silicon has given us a similar advantage.”

“Graviton3 is optimized up the wazoo for our data centers, because we’re the only customer for these things. We know what our conditions are, whereas other manufacturers have to support the most weird and unusual data center configurations.”

At AWS re:Invent last year, attended by TechRadar Pro, the company launched new EC2 instances powered by Graviton3, which is said to deliver up to 25% better compute performance and 60% better power efficiency than the previous generation, at least in some scenarios.

There are also a number of HPC-centric features built into Graviton3, such as 300GB/sec memory bandwidth, that typical enterprise workloads would never stretch to the limit, Bouffler explained. “We’re pushing in every direction for HPC, that’s what we always do.”

The more HPC, the merrier

Asked where AWS will take its HPC services next, Bouffler quoted a favorite saying of Jeff Bezos: “No customer has ever asked for less variety and higher prices”.

Moving forward, then, Bouffler and his team will continue to sound out customers and work to offer a wider variety of instances, built on a broader range of hardware, to address their specific needs.

Another focus will be on bringing down the cost of running HPC workloads in the cloud. With this objective in mind, AWS launched a new AMD EPYC Milan-based EC2 instance called Hpc6a in January, which AWS says offers up to 65% better price performance than comparable x86-based instances. Bouffler says AWS did “all sorts of nutty things” to help bring down the cost.

It’s not just about academic and scientific use cases, either. AWS is working with a diverse range of companies, from Western Digital to Formula One, to help accelerate product design, and hopes to expand into a wider range of industries in future.

“We’re getting HPC into every nook and cranny of the economy,” added Bouffler. “And the more the merrier.”

One of the worst Nintendo Switch ports is getting a complete overhaul

Ark: Survival Evolved, one of the worst Nintendo Switch ports, is getting a comprehensive overhaul as part of the Ark: Ultimate Survivor Edition upgrade, which arrives this September.

According to developer Studio Wildcard, the code for Ark: Survival Evolved on Switch is being completely rewritten from the ground up, and will utilize the latest version of Unreal Engine 4. While Studio Wildcard won’t be handling the port in-house, the dev said it’s contracted a talented third-party developer to revamp the game's functionality, graphics, and optimization.

As well as a complete overhaul of how the game runs on Switch, Ark: Ultimate Survivor Edition includes hundreds of hours of new content, all the expansion packs, new cinematic story content, custom server support powered by Nitrado server networks, and a “young explorers mode” – a new educational mode that lets you discover and learn all about the prehistoric creatures that inspired the game.

Crucially, those who already own Ark: Survival Evolved will receive the update for free, and all existing player progress and save data will be maintained after the upgrade. That’s a nice touch, especially considering the state the game originally launched in.

A prehistoric port

So why was Ark: Survival Evolved so bad on Switch? Well, the game has never worked well on consoles in the first place, and even powerful PCs can sometimes struggle to run it. It made the Switch port a curious decision, then, and the port was – to put it bluntly – a disaster.

According to tech experts Digital Foundry, who analyzed the game back in 2018, Ark: Survival Evolved on Switch had “more sacrifices than any Switch port we’ve tested to date.”

Digital Foundry went as far as to say “this is the lowest resolution Switch game, or any recent console game, we’ve ever tested. It’s worse than just about every game on PlayStation 2, GameCube, Xbox, and the like.”

The frame rate of Ark: Survival Evolved thankfully wasn’t as jarring as the game's cut-down graphics and blurry resolution, though players still had to suffer frequent dips and stutters, as the game rarely hit its 30fps target.

Fortunately, Ark: Survival Evolved is an outlier among Switch ports. A number of so-called “impossible ports” on Nintendo Switch, including Doom Eternal, Warframe, and The Witcher 3, have defied expectations. Even though graphics and performance tend to be heavily compromised, these ports still provide a comparable experience to other platforms.

It remains to be seen whether Ark: Ultimate Survivor Edition will right the wrongs of its initial release, but Switch owners who parted with $50 / £50 / AU$79.95 should at least feel slightly less ripped off.

Apple's exploring its own version of the invisible headphones we loved from CES 2022

The next Apple AirPods might not be a pair of in-ear headphones at all.

A recently discovered Apple patent application (via Patently Apple) shows that the company is exploring a new category of wearable audio device, which could become part of the AirPods family of true wireless earbuds and headphones.

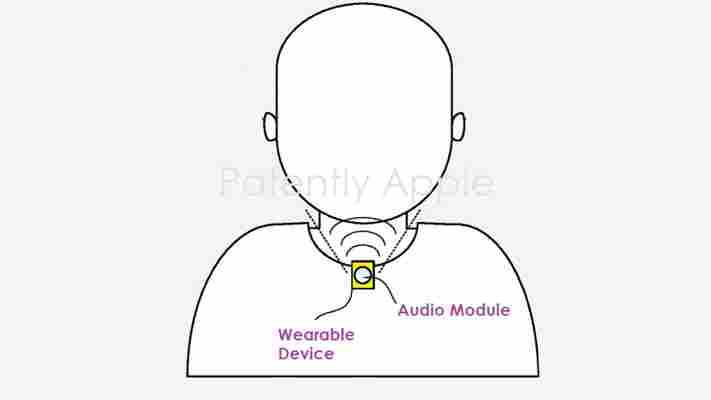

Like the invisible headphones we saw at CES earlier this year, the patent describes an audio module that can direct audio waves to the user’s ears. However, unlike the Noveto N1, which looks like a small soundbar, Apple's patented device can be worn on the user's clothing, with an image showing how it could be attached to a collar.

To prevent the user's music from disturbing others (and to offer a modicum of privacy), the audio waves would be focused by a 'parametric array of speakers that limit audibility to others'.

The patent application also describes how the device could react to the surroundings or properties of the user, with microphones to detect sound and other sensors. While the application doesn't go into much detail about the kinds of sensors this could include, we can imagine a capacitive force sensor being used to automatically play/pause music when it detects that the device has been removed from the user's clothing.

It's an interesting concept, and one that could bring personal music listening on the go to people who don't like the bulk of over-ear headphones or the intrusive nature of in-ear headphones.

As the patent application points out, 'many audio headsets are somewhat obtrusive to wear and can inhibit the user's ability to hear ambient sounds or simultaneously interact with others near the user'.

How likely is a pair of Apple invisible headphones?

It's hard to say whether Apple will actually release the device described in the patent application, and it's important to remember that a patent filing never guarantees that a product will see the light of day.

For what it's worth, we don't think it's super likely. Apple tends to let other companies experiment with new technologies and form factors before launching its own version. The tech giant has a reputation for making devices that 'just work', and committing to an unproven technology could be disastrous if it didn't live up to Apple's standards.

If Apple is trying to get around some of the issues associated with wearing headphones, there are other ways to do it. For instance, people who want to be able to listen to music without shutting themselves off from the world could use wireless earbuds like the Sony LinkBuds, which feature an open driver design that keeps your ears free to hear your surroundings.

In any case, Apple is pretty prolific when it comes to filing patents, particularly those related to personal audio devices. We've seen lots of patents for the next pair of AirPods (the rumored AirPods Pro 2), which describe everything from buds that can measure your blood oxygen to truly lossless audio streaming that swaps Bluetooth connectivity for optical pairing.

In other words, we'll believe it when we (don't) see it.